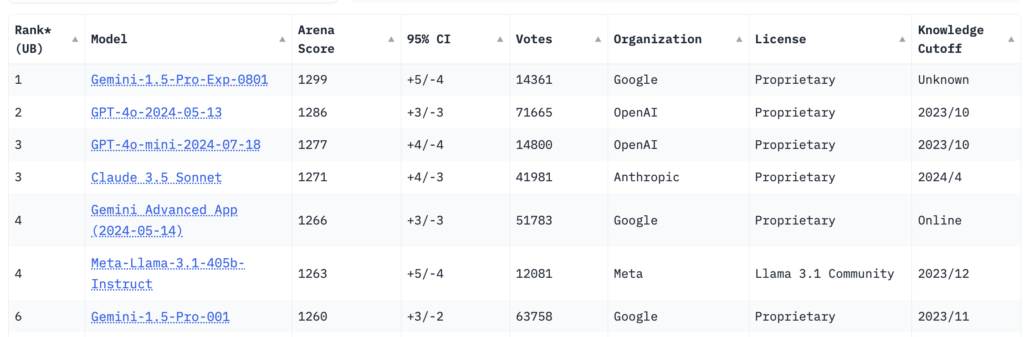

Google’s Gemini 1.5 Pro Takes the Lead in Generative AI Benchmarks

The landscape of generative AI has just gotten more interesting: Google's experimental Gemini 1.5 Pro model has surpassed OpenAI's GPT-4o in benchmark scores. In recent months, OpenAI's GPT-4o and Anthropic's Claude-3 have dominated the field, but Google now appears to have taken the lead.

The Benchmark Scores:

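One of the most widely recognized benchmarks in the AI community is the LMSYS Chatbot Arena. In the Arena, users chat with two anonymous models side by side and vote for the better response, and these crowdsourced pairwise votes are aggregated into an Elo-style rating for each model. The latest scores show that: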

- GPT-4o achieved a score of 1,286

- Claude-3 secured a commendable 1,271

- A previous iteration of Gemini 1.5 Pro had scored 1,261

However, the current experimental version of Gemini 1.5 Pro has taken the top spot, with a score above all three.
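To put the gaps between these ratings in perspective, the short sketch below converts a rating difference into an expected head-to-head preference rate. It assumes the Arena ratings follow the standard Elo logistic formula (base 10, scale 400); the function name `expected_win_rate` is hypothetical, and only the three published scores listed above are used, since the new Gemini score is not given here.

```python
def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Expected share of head-to-head votes model A wins against model B.

    Assumes the standard Elo logistic formula (base 10, scale 400); this is an
    illustrative assumption, not LMSYS's published code.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Arena ratings quoted in this article.
scores = {
    "GPT-4o": 1286,
    "Claude-3": 1271,
    "Gemini 1.5 Pro (previous)": 1261,
}

leader = "GPT-4o"
for name, rating in scores.items():
    if name == leader:
        continue  # skip comparing the leader with itself
    p = expected_win_rate(scores[leader], rating)
    print(f"{leader} vs {name}: {p:.1%} expected win rate")
```

Under that assumption, a 15-point lead corresponds to being preferred in roughly 52% of matchups and a 25-point lead to roughly 54%, so small rating gaps at the top of the leaderboard reflect narrow margins.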

What This Means:

This development marks a significant milestone in the ongoing race for AI leadership among tech giants. Google overtaking OpenAI and Anthropic on this leaderboard shows how rapidly the field is moving and how intense competition is driving these advancements.

The Future of AI:

As the AI landscape continues to evolve, it will be interesting to see how OpenAI and Anthropic respond to this challenge from Google. Will they be able to reclaim their positions at the top of the leaderboard, or has Google established a new standard for generative AI performance? Only time will tell.